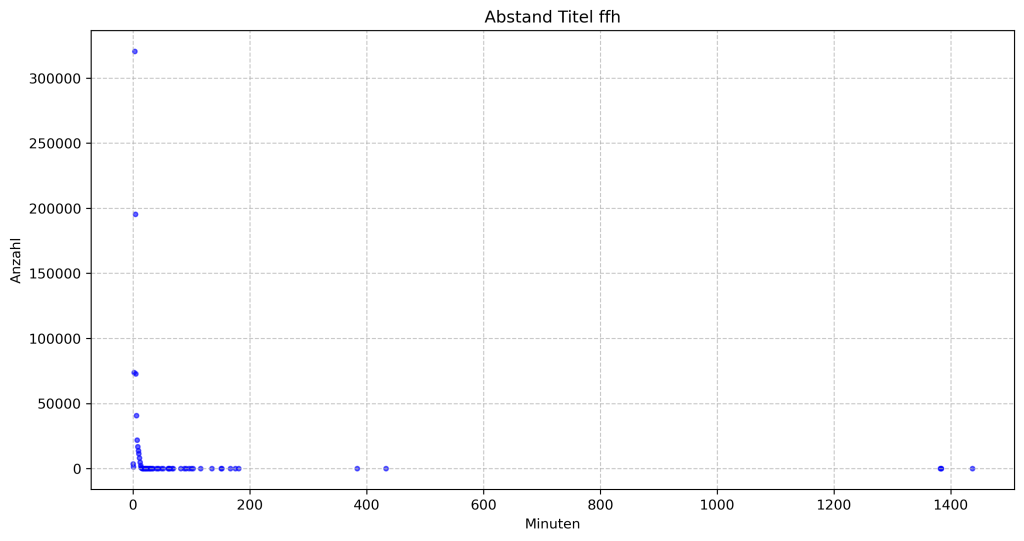

The question of when "Last Christmas" by "WHAM!" gets its first airplay of the year cannot be answered as neatly as I may have tried to make it sound during the Q&A. The reason is that stations like to play the song "for the first time this year" as early as March or July, as a gag or in some other context:

| Station | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1Live | 23.12. | 07.12. | 22.12. | 24.09. | 07.08. | | | | |
| MDRSputnikLive | 24.12. | 01.04. | 24.12. | | | | | | |
| absolutehot | 23.11. | | | | | | | | |
| antennemv | 23.12. | 29.11. | | | | | | | |
| antenneniedersachsen | 25.10. | 06.04. | 27.11. | 02.12. | 24.10. | | | | |
| bayern3 | 02.12. | 06.01. | 22.03. | 24.06. | 24.08. | 03.06. | | | |
| bigfm | 17.12. | 09.12. | 08.12. | 07.12. | 25.03. | 14.03. | 06.12. | | |
| bigfmsaar | 09.12. | 08.12. | 07.12. | 25.03. | 06.12. | | | | |
| bremennext | 14.03. | | | | | | | | |
| bremenvier | 08.11. | 19.01. | 28.10. | 25.11. | 14.03. | 25.11. | | | |
| dasding | 05.04. | 06.03. | 01.04. | 11.11. | 31.03. | 26.04. | | | |
| egofm | 15.12. | 14.12. | 24.12. | 23.12. | 12.12. | 03.12. | | | |
| ffh | 26.11. | 22.02. | 15.09. | 09.04. | 24.11. | 01.12. | 01.05. | | |
| ffn | 01.12. | 23.04. | 28.11. | 27.11. | 27.11. | 10.04. | 01.04. | | |
| hitradiortl | 24.12. | 26.11. | 27.11. | 24.10. | | | | | |
| hr3 | 19.12. | 07.08. | 24.11. | 10.11. | 03.06. | | | | |
| jamfm | 23.09. | 26.11. | 16.12. | 14.12. | | | | | |
| kissfm | 02.12. | 24.12. | 27.08. | | | | | | |
| mdrjump | 29.11. | 27.11. | 26.11. | 21.11. | 27.11. | 29.11. | | | |
| ndr2 | 03.12. | 05.09. | 29.11. | 24.11. | 24.11. | 25.11. | 03.01. | 24.03. | |
| njoy | 21.04. | 11.04. | 04.04. | 16.04. | 08.04. | 30.03. | | | |
| planet | 17.12. | 15.12. | 11.12. | 03.12. | 29.11. | 07.09. | 05.11. | | |
| puls | 18.12. | 04.12. | 21.06. | 09.11. | 07.11. | 24.12. | | | |
| radio91.2 | 15.09. | 24.08. | 07.11. | 15.11. | | | | | |
| rpr1 | 21.04. | 01.12. | 22.10. | 13.01. | 28.11. | 01.12. | | | |
| rsh | 22.12. | 13.04. | 17.04. | 10.04. | 30.03. | | | | |
| rtl | 30.11. | 25.11. | 27.11. | 25.11. | | | | | |
| spreeradio | 29.11. | 28.11. | 27.11. | 25.11. | | | | | |
| sr1 | 26.11. | 03.05. | 10.11. | 01.05. | | | | | |
| swr3 | 24.07. | 24.01. | 30.05. | 24.11. | 20.02. | 16.01. | | | |
| top40 | 29.04. | 24.11. | 11.11. | | | | | | |
| unserding | 15.02. | 11.11. | | | | | | | |
| wdr2 | 20.12. | 21.03. | 19.11. | 25.06. | 16.01. | | | | |
| youfm | 23.12. | 03.04. | 16.07. | 25.11. | 20.02. | 03.12. | | | |

And for comparison, here are the dates when counting only starts on 01.10. of each year:

| Station | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1Live | 23.12. | 07.12. | 22.12. | 17.11. | 11.10. | | | | |
| MDRSputnikLive | 24.12. | 24.12. | | | | | | | |
| absolutehot | 23.11. | | | | | | | | |
| antennemv | 23.12. | 29.11. | | | | | | | |
| antenneniedersachsen | 25.10. | 27.11. | 02.12. | 24.10. | | | | | |
| bayern3 | 02.12. | 13.11. | 13.10. | 24.10. | 24.11. | 31.10. | | | |
| bigfm | 17.12. | 09.12. | 08.12. | 07.12. | 06.10. | 06.12. | | | |
| bigfmsaar | 09.12. | 08.12. | 07.12. | 06.10. | 06.12. | | | | |
| bremennext | | | | | | | 09.11. | | |
| bremenvier | 08.11. | 18.11. | 28.10. | 25.11. | 09.11. | 25.11. | | | |
| dasding | 12.11. | 11.11. | 24.11. | 29.11. | | | | | |
| egofm | 15.12. | 14.12. | 24.12. | 23.12. | 12.12. | 03.12. | | | |
| ffh | 26.11. | 25.11. | 27.11. | 26.11. | 24.11. | 01.12. | 01.12. | | |
| ffn | 01.12. | 24.10. | 28.11. | 27.11. | 27.11. | 06.12. | 03.12. | | |
| hitradiortl | 24.12. | 26.11. | 27.11. | 24.10. | | | | | |
| hr3 | 19.12. | 27.11. | 24.11. | 10.11. | 05.11. | | | | |
| jamfm | 26.11. | 16.12. | 14.12. | | | | | | |
| kissfm | 02.12. | 24.12. | 24.12. | | | | | | |
| mdrjump | 29.11. | 27.11. | 26.11. | 21.11. | 27.11. | 29.11. | | | |
| ndr2 | 03.12. | 16.11. | 29.11. | 24.11. | 24.11. | 25.11. | 03.12. | 26.11. | |
| njoy | 17.11. | 17.10. | 28.11. | 24.11. | 17.11. | 01.12. | | | |
| planet | 17.12. | 15.12. | 11.12. | 03.12. | 29.11. | 01.12. | 05.11. | | |
| puls | 18.12. | 04.12. | 09.11. | 07.11. | 24.12. | | | | |
| radio91.2 | 24.11. | 28.11. | 07.11. | 15.11. | | | | | |
| rpr1 | 27.11. | 01.12. | 22.10. | 09.11. | 28.11. | 01.12. | | | |
| rsh | 22.12. | 26.11. | 24.11. | 10.11. | 29.11. | | | | |
| rtl | 30.11. | 25.11. | 27.11. | 25.11. | | | | | |
| spreeradio | 29.11. | 28.11. | 27.11. | 25.11. | | | | | |
| sr1 | 26.11. | 02.12. | 10.11. | 29.11. | | | | | |
| swr3 | 01.12. | 14.11. | 02.12. | 24.11. | 02.11. | 02.11. | | | |
| top40 | 23.12. | 24.11. | 11.11. | | | | | | |
| unserding | 11.11. | | | | | | | | |
| wdr2 | 20.12. | 24.11. | 19.11. | 18.11. | 05.10. | | | | |
| youfm | 23.12. | 06.11. | 25.11. | 06.12. | 03.12. | | | | |
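
The only difference between the two tables is the start of the counting window, which can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the pipeline behind the numbers above: the function name `first_plays`, the `(station, timestamp)` input format, the station `examplefm` and all sample timestamps are made up for the example.

```python
from datetime import date, datetime


def first_plays(plays, cutoff=None):
    """Return {(station, year): date of the earliest counted play}.

    plays  -- iterable of (station, datetime) pairs (assumed input format)
    cutoff -- optional (month, day) tuple; plays earlier in the calendar
              year than this are ignored, e.g. (10, 1) for 01.10.
    """
    result = {}
    for station, played_at in plays:
        day = played_at.date()
        # Skip spring and summer gag plays when a cutoff is given.
        if cutoff is not None and (day.month, day.day) < cutoff:
            continue
        key = (station, day.year)
        if key not in result or day < result[key]:
            result[key] = day
    return result


def fmt(day):
    """Format a date the way the tables do, e.g. 01.04."""
    return day.strftime("%d.%m.")


# Made-up sample log for a hypothetical station, not real playlist data.
plays = [
    ("examplefm", datetime(2021, 4, 1, 8, 15)),    # April Fools' gag
    ("examplefm", datetime(2021, 11, 26, 17, 0)),
    ("examplefm", datetime(2021, 12, 24, 12, 0)),
    ("examplefm", datetime(2022, 12, 2, 9, 30)),
]

all_year = first_plays(plays)                      # like the first table
from_october = first_plays(plays, cutoff=(10, 1))  # like the second table

for key in sorted(all_year):
    # A station with no play after the cutoff gets an empty cell.
    later = from_october.get(key)
    print(key, fmt(all_year[key]), fmt(later) if later else "")
```

A year in which a station only played the song before the cutoff simply has no entry in the second result, which corresponds to an empty cell in the second table.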